In our previous post, “Why Lab Scheduling Breaks – And How Dynamic Replanning Fixes It,” we laid the groundwork for understanding scheduling in automated life sciences labs: what it is, why it’s complex, and the trade-offs between dynamic and static scheduling.

At Automata, we chose a third, best-of-both-worlds approach: static scheduling with dynamic replanning, using powerful constraint solvers to quickly replan schedules when reality diverges from expectation.

As a reminder, a scheduler takes a defined workflow—essentially a series of lab tasks linked by timing constraints and resource dependencies—and generates a precise plan, assigning each task to instruments along a timeline. A dynamic replanning scheduler goes further, initially creating an optimized static schedule but also intelligently adapting in real time when actual execution differs from the planned timeline, avoiding issues like deadlocks or resource conflicts. If you’re new to these concepts or want more detail, check out our previous post.
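To make “generating a plan” concrete, here is a deliberately naive sketch. It is not how our scheduler works: it uses a greedy earliest-start heuristic rather than a constraint solver, and the `Task` fields and instrument names are hypothetical. Each task simply starts as soon as its dependencies have finished and its instrument is free.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Hypothetical task: runs on one instrument for a fixed duration."""
    name: str
    instrument: str
    duration: int  # seconds
    depends_on: list[str] = field(default_factory=list)


def greedy_schedule(tasks: list[Task]) -> dict[str, tuple[int, int]]:
    """Give each task the earliest (start, end) that respects its dependencies
    and lets each instrument run only one task at a time.

    Tasks are placed in list order, so every dependency must appear before
    the tasks that depend on it.
    """
    plan: dict[str, tuple[int, int]] = {}
    instrument_free_at: dict[str, int] = {}
    for task in tasks:
        # Cannot start before every predecessor has finished...
        ready = max((plan[dep][1] for dep in task.depends_on), default=0)
        # ...or before the previous task on the same instrument is done.
        start = max(ready, instrument_free_at.get(task.instrument, 0))
        plan[task.name] = (start, start + task.duration)
        instrument_free_at[task.instrument] = start + task.duration
    return plan


workflow = [
    Task("dispense", "liquid_handler", 120),
    Task("seal", "sealer", 30, ["dispense"]),
    Task("incubate", "incubator", 600, ["seal"]),
    Task("dispense_2", "liquid_handler", 120),
    Task("read", "reader", 90, ["incubate"]),
]
plan = greedy_schedule(workflow)
for name, (start, end) in plan.items():
    print(f"{name:<12} {start:>4}s -> {end:>4}s")
```

A greedy pass like this never revisits an earlier choice, so it can easily miss better orderings or break tighter timing constraints; that is the gap constraint solvers are built to close.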

In this article, we’ll zoom in on the key features and approaches that elevate a scheduler from merely functional to truly exceptional. Just “having a scheduler” isn’t enough; the specifics of how it works dramatically affect user experience, lab convenience, and overall efficiency. We’ll outline the design principles that drove our scheduler, some of the challenges we encountered in development, and the features we ultimately settled on.

We’ll dive deeper into these essential features that have driven Automata’s scheduler development:

- Flexibility in articulating a workflow as input.

- Clear data format of scheduled workflow as output.

- Scheduling beyond instrument tasks.

- Rich instrument integration.

- Powerful error handling.

- Robust and flexible planning infrastructure.

Let’s jump in.

Flexibility in articulating a workflow as input.

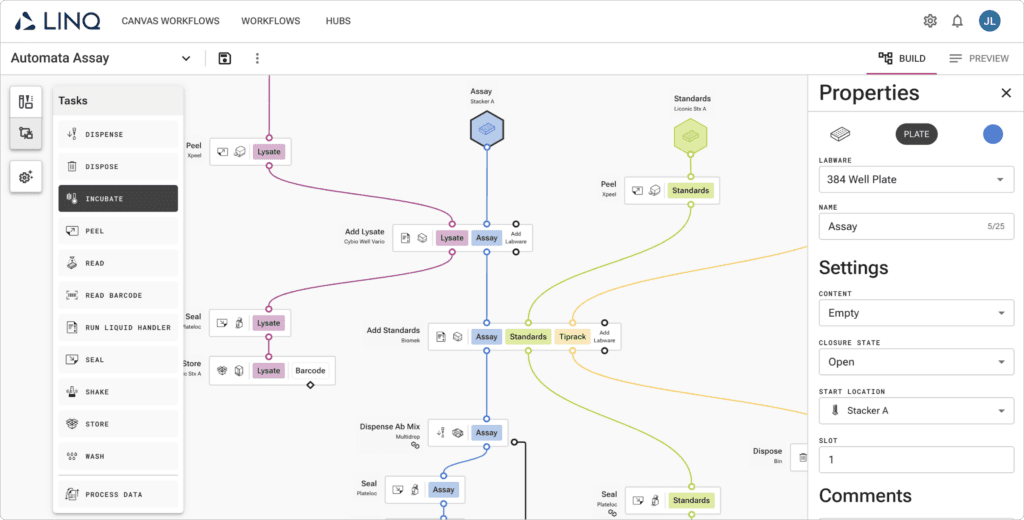

Not everyone interacts with scheduling software in the same way—and that’s okay. Some users prefer an intuitive graphical user interface (GUI), while others lean toward scripting for greater flexibility and control. Recognizing these diverse needs, we built our scheduling system to accommodate both seamlessly.

For users who prefer a visual approach, we offer Visual Canvas, a user-friendly GUI designed for clarity and ease of use. Visual Canvas enables you to intuitively drag and drop tasks, link dependencies, and clearly visualize workflow constraints—all without sacrificing the complexity or expressiveness that sophisticated workflows require. This intuitive interface doesn’t just make building workflows easy; it also makes them maintainable, shareable, and straightforward to version. Proper GUI-based versioning is particularly valuable in collaborative lab environments, where multiple users might iterate or refine a workflow over time.

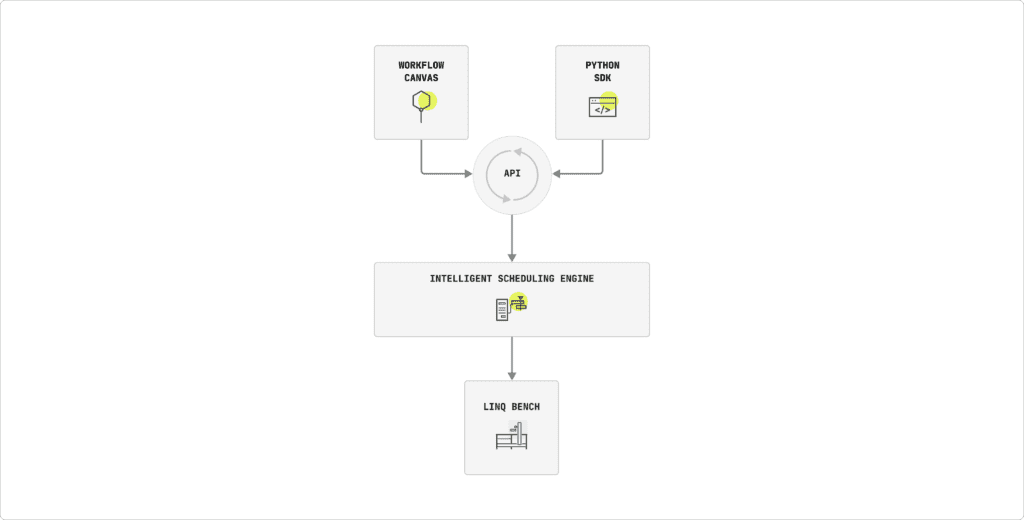

On the other side of the spectrum, developers and power users who prefer scripting have access to our robust Python SDK. The SDK provides precise programmatic control to dynamically generate, manipulate, and validate complex workflows through clear, concise Python code. This is especially powerful in environments where workflows are assembled on the fly based on external conditions or incoming requests. A purely GUI-driven approach can’t manage that, but a business logic layer or user application can easily handle it through standard API-driven data integrations.
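As an illustration of assembling a workflow on the fly, the sketch below builds a workflow definition from an incoming request and serializes it for submission over an API. It is a hypothetical example, not our SDK: the `build_workflow` helper, the request fields, and the task schema are invented for illustration, and only the Python standard library is used.

```python
import json


def build_workflow(sample_ids: list[str], needs_qc: bool) -> dict:
    """Assemble a workflow definition from an incoming request.

    The layout (tasks with ids, actions, and dependencies) is a hypothetical
    schema for illustration, not an actual workflow format.
    """
    steps = ["dispense", "seal", "incubate"]
    if needs_qc:
        # Extend the workflow based on what the request asks for.
        steps.append("qc_read")
    tasks = []
    for sid in sample_ids:
        previous = None
        for step in steps:
            task_id = f"{sid}:{step}"
            tasks.append({
                "id": task_id,
                "action": step,
                "depends_on": [previous] if previous else [],
            })
            previous = task_id
    return {"name": f"batch-of-{len(sample_ids)}", "tasks": tasks}


# e.g. a request arriving from a LIMS or another upstream service
request = {"samples": ["P1", "P2"], "needs_qc": True}
workflow = build_workflow(request["samples"], request["needs_qc"])
payload = json.dumps(workflow)  # serialized, ready to submit over an API
```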

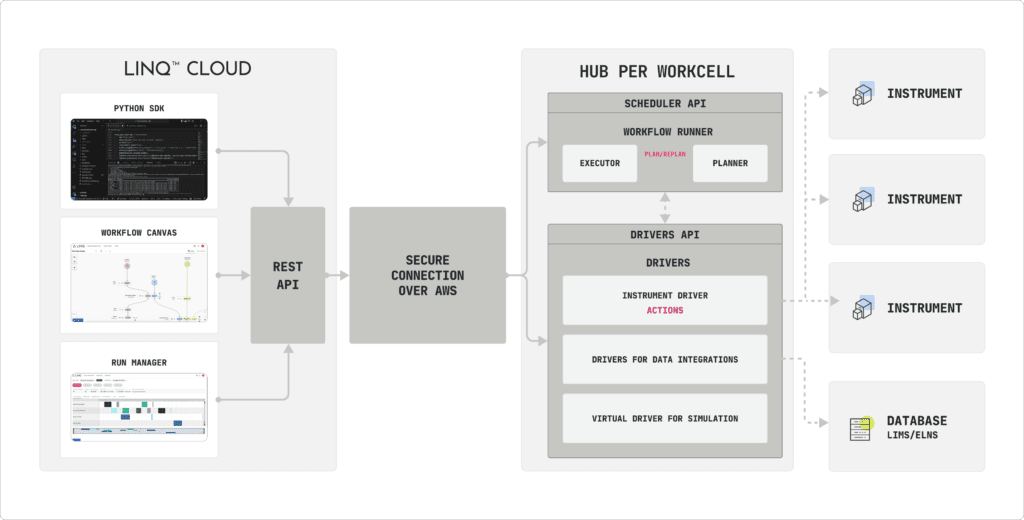

Crucially, both Visual Canvas and our Python SDK interface directly with the same underlying API, ensuring consistency, interoperability, and ease of transition between GUI-based and code-based approaches. This unifying architecture guarantees that a workflow created visually can be seamlessly accessed, validated, modified, or even automated via scripts, and vice versa.

The driving principle behind this flexibility is simple: articulating workflows clearly, efficiently, and powerfully shouldn’t force users to compromise on usability or complexity. By carefully balancing visual ease-of-use with the robustness of scriptable control, our scheduler ensures that users across the spectrum—from scientists to developers—can articulate, validate, and manage their workflows in the manner that best fits their preferences and use cases.

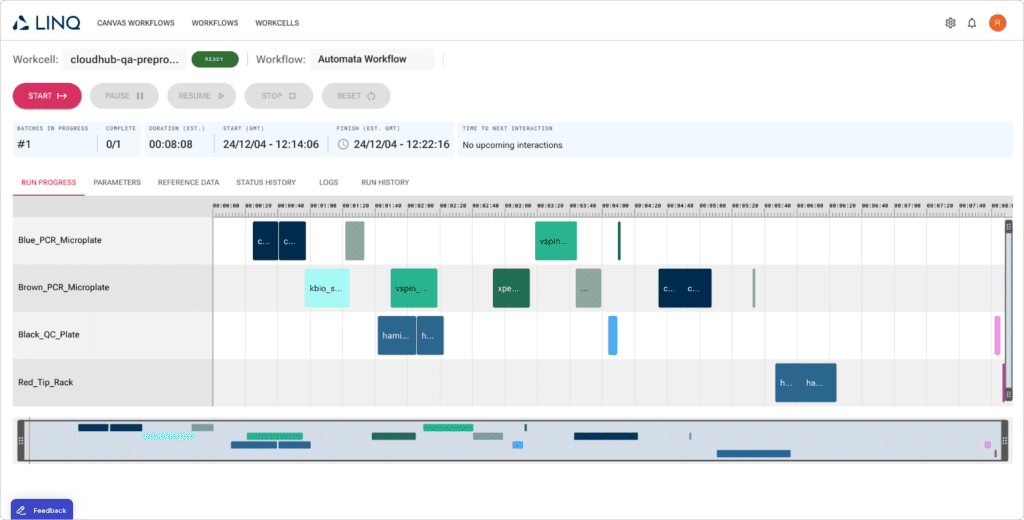

Clear data format of scheduled workflow as output.

A workflow isn’t fully interpretable until it’s been scheduled—only then can you truly visualize and grasp how tasks interrelate in real time. The clearest manifestation of this is the familiar Gantt chart, a visualization of task timelines, instrument allocations, and workflow dependencies, laid out explicitly across the time domain.

That is why our scheduler returns every solved schedule in a clear, standardized data format, which serves two purposes. First, it enables our internal visualization tools, like the Visual Canvas and the runtime control GUI, to render detailed and insightful visualizations. Operators and workflow developers benefit directly: a clear visualization significantly improves their ability to interpret, validate, and optimize workflows at a glance. It also helps them quickly identify potential bottlenecks or resource conflicts long before they become problematic. In a sense, this is simulation: a solved schedule is an in-silico representation of what a workflow will do on hardware.

Second, the standardized output format is essential for downstream developer applications. By returning scheduling results in a structured, predictable form (in this case JSON via the LINQ API), external tools or scripts can independently parse, analyze, validate, or visualize the scheduled workflows. This independence allows labs and developers the flexibility to build custom integrations, reporting tools, or even automated validation layers tailored specifically to their needs.
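For example, an independent validation layer can be only a few lines long. The sketch below assumes a hypothetical JSON layout (a `tasks` list with `id`, `instrument`, `start`, and `end` fields), not the actual LINQ API schema, and flags any task that starts on an instrument before an earlier task there has finished. The sample payload contains a deliberate conflict.

```python
import json
from collections import defaultdict

# Hypothetical solved-schedule payload; field names are illustrative only.
SCHEDULE_JSON = """
{"tasks": [
  {"id": "dispense",   "instrument": "liquid_handler", "start": 0,   "end": 120},
  {"id": "seal",       "instrument": "sealer",         "start": 120, "end": 150},
  {"id": "dispense_2", "instrument": "liquid_handler", "start": 100, "end": 220}
]}
"""


def find_overlaps(schedule: dict) -> list[tuple[str, str]]:
    """Flag every task that starts on an instrument before an earlier task
    there has finished, paired with the earlier task that ends last."""
    by_instrument = defaultdict(list)
    for task in schedule["tasks"]:
        by_instrument[task["instrument"]].append(task)
    clashes = []
    for tasks in by_instrument.values():
        latest = None  # the task seen so far with the latest end time
        for task in sorted(tasks, key=lambda t: t["start"]):
            if latest is not None and task["start"] < latest["end"]:
                clashes.append((latest["id"], task["id"]))
            if latest is None or task["end"] > latest["end"]:
                latest = task
    return clashes


print(find_overlaps(json.loads(SCHEDULE_JSON)))
```

Tracking the task with the latest end time, rather than only comparing neighbours, also catches a short task that overlaps a long one started much earlier.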

By providing scheduling results in a universally interpretable format, we open the door to robust, versatile integration to empower labs with more visibility and greater control over their automation stack.

Creating this schedule is a non-trivial combinatorial optimization problem: multiple tasks may run in parallel, and numerous resource, timing, and ordering constraints must all be satisfied at once. The central question, then, is how a scheduler arrives at a solution.

It’s hard to overstate how computationally complex this “flexible job shop scheduling” problem can be (it is NP-hard in general). That complexity is what leads to different approaches to scheduling, and it’s why we have an entire team of developers dedicated to our scheduler here at Automata!
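To give a feel for the numbers, the sketch below counts only the ways to assign tasks to instruments and order them on each one, ignoring timing entirely. It assumes, for simplicity, that every task can run on any of the instruments; real workflows restrict which instruments a task may use but add timing decisions on top, so treat the result as indicative only.

```python
from math import comb, factorial


def sequencing_choices(n_tasks: int, n_instruments: int) -> int:
    """Count assignments of n distinct tasks to k instruments, including the
    order of tasks on each instrument, ignoring all timing constraints.

    Assumes any task may run on any instrument. The count is
    n! * C(n + k - 1, k - 1): the ways to split n labelled tasks into
    k labelled, ordered queues (some queues may be empty).
    """
    return factorial(n_tasks) * comb(n_tasks + n_instruments - 1, n_instruments - 1)


for n in (5, 10, 20):
    print(n, "tasks on 3 instruments:", f"{sequencing_choices(n, 3):,}")
```

With three instruments, 20 tasks already give more than 10^20 candidate sequences before a single timing decision is made, which is why exhaustive search is out of the question and solvers must prune the space instead.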

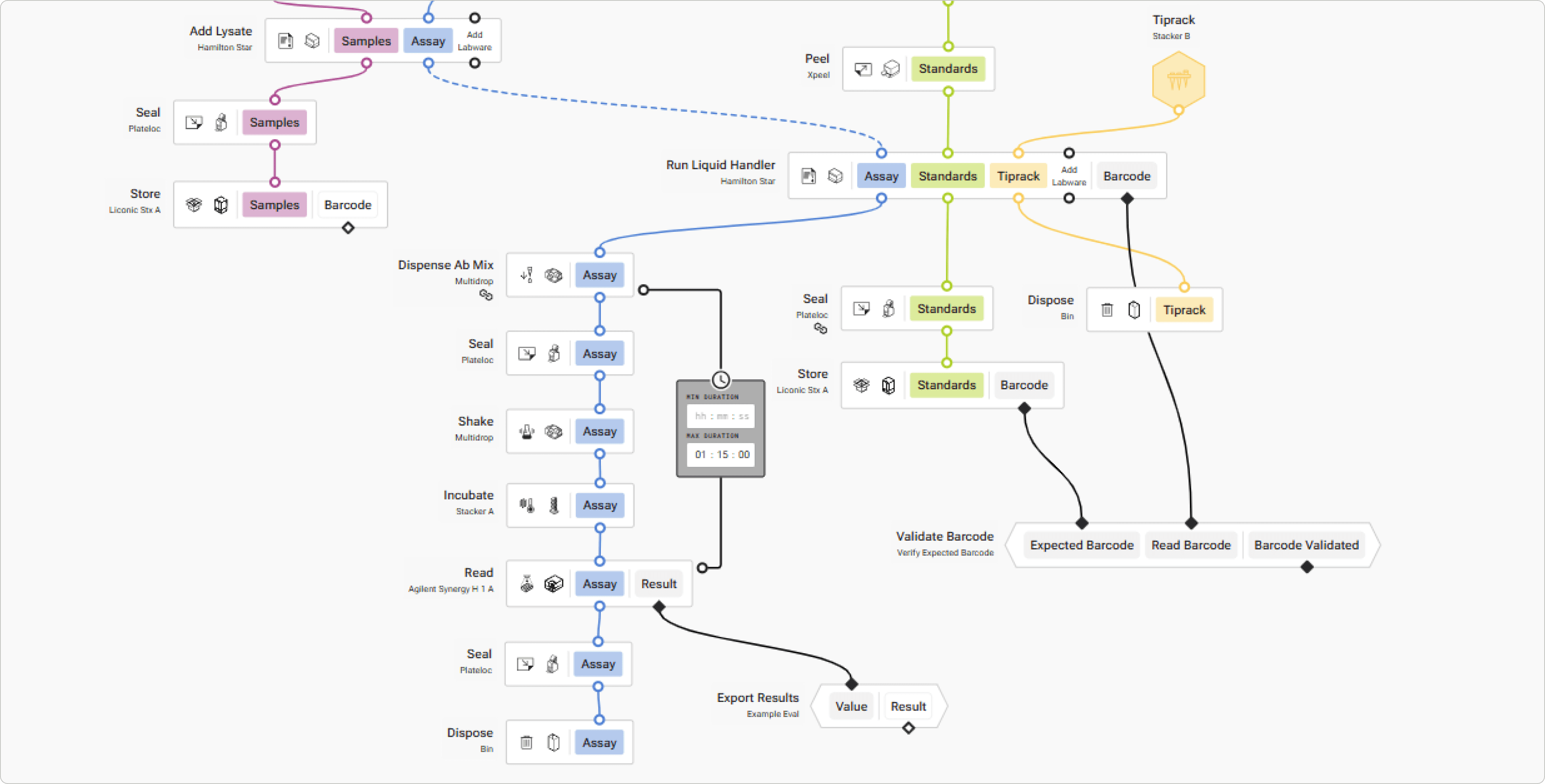

Scheduling beyond instrument tasks.

In automated lab environments, a workflow goes far beyond just instrument operations. Equally critical are the tasks operators perform—loading samples, unloading plates, inspecting instruments, cleaning equipment, or resolving errors—and the various steps executed in software, such as data formatting, external system calls, or waiting on external results. Each of these tasks has direct implications for workflow success and timing, making them just as important to schedule precisely.

Recognizing this reality, we’ve designed our scheduler to treat these non-instrument tasks as first-class citizens. This means they have all the same scheduling capabilities and constraints as instrument tasks: you can define precise dependencies, set explicit time constraints, and integrate them explicitly into your workflow’s dependency graph. No longer are these vital operational tasks left as vague, untracked “manual interventions” or “code stuff that just happens” – instead, they’re clearly scheduled, tracked, and accounted for, to ensure maximum visibility and predictability across the entire workflow.
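One way to picture “first-class” non-instrument tasks is a single task model in which operator and software steps sit in the same dependency graph as instrument steps and are validated the same way. The sketch below is illustrative only: the `Task` fields and task names are hypothetical, and it uses Python’s standard `graphlib` module to order the tasks and reject cyclic dependencies.

```python
from dataclasses import dataclass, field
from graphlib import CycleError, TopologicalSorter
from typing import Literal


@dataclass
class Task:
    name: str
    kind: Literal["instrument", "operator", "software"]
    depends_on: list[str] = field(default_factory=list)


def execution_order(tasks: list[Task]) -> list[str]:
    """Order all tasks, of any kind, so that dependencies always come first.

    Raises graphlib.CycleError if the dependencies form a loop.
    """
    graph = {task.name: task.depends_on for task in tasks}
    return list(TopologicalSorter(graph).static_order())


workflow = [
    Task("load_plates", "operator"),
    Task("dispense", "instrument", ["load_plates"]),
    Task("read", "instrument", ["dispense"]),
    Task("upload_results", "software", ["read"]),
    Task("inspect_tips", "operator", ["dispense"]),
]
order = execution_order(workflow)
print(order)

try:
    execution_order([Task("a", "software", ["b"]), Task("b", "operator", ["a"])])
except CycleError as err:
    print("invalid workflow:", err.args[0])
```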

Moreover, since outcomes of these non-instrument tasks can impact the workflow’s direction—such as passing or failing a quality check, or successfully retrieving external data—our scheduler explicitly supports conditional workflow execution. Tasks can dynamically alter the flow based on real-time evaluations or external inputs, triggering entirely different workflow paths as needed.

This ability pairs naturally with our dynamic replanning scheduler: if a condition resolves at runtime in an unexpected way, we immediately and automatically replan the workflow. By seamlessly integrating conditional execution and dynamic replanning, our scheduler ensures that your workflow remains both resilient and flexible—even in the face of real-world unpredictability.
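The sketch below shows the idea in miniature: the runtime outcome of a QC task selects which branch of the workflow runs next, and the chosen branch is then laid out from the current time. The branch names and durations are hypothetical, and the “replan” simply chains steps back to back; a real replanner would re-solve the whole schedule against all of its constraints.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    duration: int  # seconds


# Hypothetical branches keyed by the outcome of a QC task.
BRANCHES = {
    "pass": [Step("seal", 30), Step("store", 60)],
    "fail": [Step("re_dispense", 120), Step("qc_read", 90), Step("seal", 30)],
}


def replan_after_condition(now: int, outcome: str) -> list[tuple[str, int, int]]:
    """Pick the branch selected by a runtime outcome and lay it out from `now`.

    Returns (step name, start, end) tuples with steps placed back to back.
    """
    plan, t = [], now
    for step in BRANCHES[outcome]:
        plan.append((step.name, t, t + step.duration))
        t += step.duration
    return plan


print(replan_after_condition(now=900, outcome="fail"))
```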

In this way, features build on top of each other, and it becomes clear how one scheduler can be more or less powerful than another. Dynamic replanning not only increases reliability; it is what allows conditional execution to work at all in a predictable, simulatable, and easy-to-develop manner. That combination is impossible with either a purely static scheduler or a fully dynamic one. Again, see the previous blog post for a more detailed breakdown.

Rich instrument integration.

Not all instrument APIs are created equal. Instruments differ significantly in how they’re controlled: some drivers expose detailed, low-level functionality, others provide high-level abstractions, some rely on modern protocols, while others are constrained by legacy connections—or may even lack formal drivers entirely. Navigating this diversity can be challenging, but getting it right is crucial for workflow reliability and ease of use.

At Automata, our philosophy has been to maximize the capabilities provided by each instrument’s API, regardless of its complexity or age. Wherever possible, we tap into the deepest level of control that an instrument’s API permits, to enable precise, robust integration into your workflows. To make these integrations practical, we also develop useful, optional abstractions on top of the raw instrument commands.

For example, consider integrating a large-capacity plate hotel. At the lowest level, controlling it might involve multiple discrete driver commands: rotate the central column, move a plate to a parking bay, open the hotel door, extend the bay, and so on. For most workflow developers or operators, these individual actions are unnecessarily complex and can obscure the workflow logic. Thus, a key part of our driver design is to abstract away these granular tasks into higher-level, intuitive commands—such as simply instructing the scheduler to “Get plate in slot 65.”
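A minimal sketch of that layering follows. Everything in it is hypothetical: the driver method names, the 22-slots-per-column hotel geometry, and the recorded command strings stand in for a real instrument API, which would send commands to hardware rather than append them to a log.

```python
class PlateHotelDriver:
    """Hypothetical low-level driver; each method stands in for one raw command.

    A real driver would talk to hardware; this one just records the calls.
    """

    def __init__(self, slots_per_column: int = 22):
        self.slots_per_column = slots_per_column
        self.log: list[str] = []

    def rotate_column(self, column: int) -> None:
        self.log.append(f"rotate_column({column})")

    def move_plate_to_parking_bay(self, column: int, level: int) -> None:
        self.log.append(f"move_plate_to_parking_bay({column}, {level})")

    def open_door(self) -> None:
        self.log.append("open_door()")

    def extend_bay(self) -> None:
        self.log.append("extend_bay()")


def get_plate(driver: PlateHotelDriver, slot: int) -> list[str]:
    """High-level 'Get plate in slot N', composed from the raw commands."""
    # Map a 1-based slot number to a (column, level) position in the hotel.
    column, level = divmod(slot - 1, driver.slots_per_column)
    driver.rotate_column(column)
    driver.move_plate_to_parking_bay(column, level)
    driver.open_door()
    driver.extend_bay()
    return driver.log


print(get_plate(PlateHotelDriver(), 65))
```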

This combination of rich, low-level instrument control and user-friendly abstraction ensures workflows are both powerful and accessible. It also allows our scheduler to intelligently optimize execution, as it clearly understands each instrument’s capabilities and limitations.

For a more detailed exploration of our approach to driver design, and to understand what makes a truly great instrument driver, be sure to read our dedicated Driver Deep Dive, coming next in the series.

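For anyone with experience of purely static schedulers, the fact that real runs inevitably diverge from the plan is enough on its own to rule out static scheduling. So why does Automata build on a static schedule? Because dynamic replanning turns those deviations, including outright errors, into manageable events.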

Powerful error handling.

Workflows rarely run perfectly from start to finish—things inevitably go wrong. Operators accidentally bump transport robots, instruments experience temporary connection drops, or consumables unexpectedly run out mid-process. Because automation needs to be resilient, we designed our scheduler around the assumption that errors are inevitable, and rapid, intuitive resolution is crucial.

Dynamic replanning is at the heart of how we manage this inevitability. Because our scheduler can quickly adjust workflows on the fly, it inherently supports robust error handling. But powerful replanning alone isn’t enough. Errors must be communicated clearly, resolved quickly, and handled flexibly.

To that end, we’ve built error handling deeply into our scheduler, accessible via multiple channels:

- Operator-driven resolution: Lab operators can resolve errors securely from anywhere via our cloud-based GUI. When an error occurs, authorized users receive clear notifications and can respond immediately from a web browser, tablet, or even a mobile device. This secure, authenticated remote access keeps downtime to a minimum, even when operators aren’t physically in the lab.

- Programmatic resolution via API: Errors aren’t confined to the GUI—they’re fully accessible programmatically through our comprehensive API. This means your scripts, CLI tools, or custom-developed applications can retrieve, interrogate, and automatically or manually respond to errors. This approach allows labs to create custom automation logic tailored precisely to their own operational needs.
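As an example of the custom logic a script could apply, the sketch below encodes a simple policy: automatically retry transient connection drops a limited number of times and escalate everything else to an operator. The `LabError` record, the error kinds, and the `ESCALATE` option are hypothetical and not part of our actual API.

```python
from dataclasses import dataclass
from enum import Enum


class Resolution(Enum):
    SKIP = "skip"
    RETRY = "retry"
    ABORT_LABWARE = "abort_labware"
    ESCALATE = "escalate"  # hypothetical: hand the error to a human operator


@dataclass
class LabError:
    """Hypothetical error record, as a script might receive it."""
    task_id: str
    kind: str      # e.g. "connection_lost", "consumable_empty"
    attempts: int  # how many times this task has already been retried


def choose_resolution(err: LabError, max_retries: int = 2) -> Resolution:
    """Example lab-specific policy: auto-retry transient faults, else escalate."""
    if err.kind == "connection_lost" and err.attempts < max_retries:
        return Resolution.RETRY
    # Physical problems (empty tape, dropped plate) need a person first.
    return Resolution.ESCALATE


print(choose_resolution(LabError("seal-1", "connection_lost", attempts=0)))
```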

We support a variety of powerful resolution options, empowering users to handle real-world errors flexibly and intelligently. A few examples include:

- Skip: Imagine an operator accidentally nudging a transport robot, causing a sample to drop or miss a step. The operator can manually place the sample onto its next step and instruct the scheduler to skip the failed task. Dynamic replanning ensures the rest of the workflow smoothly adapts, continuing execution without interruption or confusion.

- Retry: Instruments occasionally falter—network hiccups, consumables run dry (like tape in a microplate sealer), or instruments temporarily go offline. After addressing the issue (reconnecting an instrument or loading new consumables), the operator simply instructs the scheduler to retry the affected task. The workflow seamlessly resumes, dynamically adjusting the timing and downstream tasks accordingly.

- Abort Labware: Sometimes labware or samples become compromised—perhaps an accidental collision ruins a critical sample. The operator can select “Abort Labware,” instructing the scheduler to completely remove that compromised labware from subsequent scheduled steps. Critically, dynamic replanning ensures that the workflow continues smoothly for unaffected samples, maintaining maximum throughput and preventing a single error from derailing an entire run.
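The three options differ mainly in which tasks remain for the replanner to schedule. The sketch below models just that step, under the assumption (ours, for illustration) that each pending task is tagged with the labware it acts on; the task ids and the string action names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PendingTask:
    task_id: str
    labware: str  # the plate this task acts on


def apply_resolution(pending: list[PendingTask], failed: PendingTask,
                     action: str) -> list[PendingTask]:
    """Return the tasks to hand to the replanner after an error.

    `pending` includes the failed task; `action` is "skip", "retry",
    or "abort_labware".
    """
    remaining = [t for t in pending if t != failed]
    if action == "skip":
        # The operator completed the step by hand: drop the failed task.
        return remaining
    if action == "retry":
        # Keep the failed task so the replanner schedules it again.
        return [failed] + remaining
    if action == "abort_labware":
        # Drop every remaining task that touches the compromised labware.
        return [t for t in remaining if t.labware != failed.labware]
    raise ValueError(f"unknown action: {action}")


pending = [
    PendingTask("move:P1", "P1"),
    PendingTask("seal:P1", "P1"),
    PendingTask("move:P2", "P2"),
    PendingTask("seal:P2", "P2"),
]
failed = pending[0]
for action in ("skip", "retry", "abort_labware"):
    print(action, [t.task_id for t in apply_resolution(pending, failed, action)])
```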

These examples represent just a few of the powerful error-handling capabilities our dynamic replanning scheduler enables. The common theme is flexibility: no matter the scenario, we strive to ensure workflows remain robust, adaptable, and minimally impacted by real-world unpredictability. By thoughtfully combining intuitive human interfaces, programmatic error management, and rapid dynamic replanning, we’ve transformed workflow error handling from a source of frustration into a source of strength and reliability.

For instance, imagine a microplate sealer runs slower than predicted. The scheduler detects the delay, updates the task’s known run time, and triggers a re-solve. Thought of intuitively, the way a person would rather than a computer, the fix is simple: shift every uncompleted bar on the Gantt chart later and check whether the time constraints are still respected. OR-Tools does something analogous but far more mathematically sophisticated, reusing much of its previous solve on every re-solve. The workflow keeps moving without lengthy downtime and without the risk of violating constraints because of an out-of-date plan.
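The intuitive version of that check fits in a few lines. This is not how OR-Tools does it; it is the simple human-style heuristic described above, assuming a hypothetical plan format and a single kind of constraint (a maximum wait between two tasks). If it reports a violation, a full re-solve is needed.

```python
from dataclasses import dataclass


@dataclass
class Planned:
    name: str
    start: int  # seconds from the start of the run
    end: int
    done: bool = False


def apply_delay(plan: list[Planned], delayed: str, delay: int,
                max_waits: dict[tuple[str, str], int]) -> list[str]:
    """Push a running task's end out by `delay`, shift later work to match,
    and report any maximum-wait constraint that no longer holds.

    max_waits maps (earlier, later) task names to the longest allowed gap,
    in seconds, between `earlier` ending and `later` starting.
    """
    by_name = {t.name: t for t in plan}
    original_end = by_name[delayed].end
    by_name[delayed].end += delay
    for task in plan:
        # Conservatively shift every uncompleted task that was due to start
        # after the delayed task finished. Shifting them all by the same
        # amount keeps their relative order, so precedence is preserved.
        if not task.done and task.name != delayed and task.start >= original_end:
            task.start += delay
            task.end += delay
    violations = []
    for (earlier, later), limit in max_waits.items():
        gap = by_name[later].start - by_name[earlier].end
        if gap > limit:
            violations.append(f"{earlier}->{later}: waited {gap}s, limit {limit}s")
    return violations


plan = [
    Planned("dispense", 0, 120, done=True),
    Planned("seal", 120, 150),  # currently running on the sealer
    Planned("incubate", 150, 750),
    Planned("read", 750, 840),
]
# Hypothetical constraint: reagent must reach the incubator within 60 s of dispensing.
problems = apply_delay(plan, "seal", 40, {("dispense", "incubate"): 60})
print(problems or "plan still valid, no re-solve needed")
```

Here a 40-second sealer overrun pushes incubation past its allowed window, so the simple shift is not enough and a proper re-solve would be triggered.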

Robust and flexible planning infrastructure.

Even the most sophisticated scheduler is only as effective as the infrastructure that supports it, which is why we deliberately designed ours to maximize flexibility, reliability, and performance. A truly robust scheduling platform needs to handle not just one-off schedules but rapid replanning, multiple concurrent users, diverse instrument drivers, and evolving codebases, all in a scalable manner. Here’s how we achieved this:

Modular invocation in the cloud

First, our scheduler runs as modular, cloud-native containers, decoupled entirely from the physical lab workcells. This decoupling has two critical advantages:

- On-demand Scalability: Because the scheduler infrastructure is containerized and hosted in the cloud, we can instantly spin up additional scheduler instances to accommodate as many simultaneous workflow developers as needed. This modularity ensures rapid response times, smooth collaboration, and unparalleled scalability.

- Independent Development: Workflow developers can create, simulate, and validate workflows independently of the physical workcell. You don’t need to wait for hardware to be free or available; you can rapidly iterate workflows anywhere, anytime, securely in the cloud.

Versioning and containerization

We also prioritized thorough versioning. Every new feature release and scheduler update is preserved as a separate, clearly labeled version, so whenever scheduling is requested, any version of the scheduler can be invoked. This approach—combined with our containerized deployment—enables remarkable flexibility:

- Simultaneous multi-version support: Any scheduler version (current or past) can be spun up on demand. This means labs can maintain validated workflows tied to a specific scheduler release, ensuring consistency and regulatory compliance, while simultaneously allowing new workflows to be developed or tested against newer scheduler versions with the latest features.

- Instrument driver versioning: Similarly, instrument driver versions are meticulously versioned and containerized. Labs can test and validate workflows against specific combinations of scheduler and driver versions, facilitating precise troubleshooting, reproducibility, and seamless version migration.

In practice, this means that two workflows can run back-to-back on the same workcell with completely different scheduling algorithms and even device drivers.
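One way to picture this pinning is a per-run manifest that resolves to specific container images. The sketch below is hypothetical throughout: the `RunManifest` fields, the version numbers, and the `registry.example.com` image naming scheme are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class RunManifest:
    """Hypothetical pin of the scheduler and driver versions a workflow uses."""
    workflow: str
    scheduler_version: str
    driver_versions: dict[str, str] = field(default_factory=dict)


def container_images(manifest: RunManifest,
                     registry: str = "registry.example.com") -> list[str]:
    """Resolve a manifest to the container images needed for one run."""
    images = [f"{registry}/scheduler:{manifest.scheduler_version}"]
    for driver, version in sorted(manifest.driver_versions.items()):
        images.append(f"{registry}/drivers/{driver}:{version}")
    return images


# A validated workflow pinned to older releases, then a development
# workflow on newer ones, run back to back on the same workcell.
validated = RunManifest("elisa-validated", "2.4.0", {"sealer": "1.2.0", "reader": "3.0.1"})
candidate = RunManifest("elisa-dev", "2.6.0", {"sealer": "1.3.0", "reader": "3.0.1"})
for manifest in (validated, candidate):
    print(manifest.workflow, container_images(manifest))
```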

Efficient execution: OR-Tools and dynamic replanning

At the core of our rapid, flexible scheduling is Google’s OR-Tools, an open-source software suite for combinatorial optimization that is well suited to complex scheduling problems. OR-Tools provides advanced techniques, in particular constraint programming and mixed-integer optimization, for tackling scheduling challenges efficiently.

Specifically, OR-Tools’ powerful CP-SAT solver can rapidly solve complex schedules from scratch, even for highly constrained workflows with numerous tasks, instruments, and timing interdependencies. But where OR-Tools really shines, and the reason we chose it, is its efficiency at incremental solving: recalculating a schedule using the previous solution as a starting point (CP-SAT, for example, accepts solution hints), which significantly speeds up replanning when real-world deviations occur.

Here’s how this practically impacts workflow execution:

- Near-instant dynamic replanning: When a workflow encounters an unexpected event—like an instrument delay or operator intervention—OR-Tools rapidly recalculates the workflow schedule in fractions of a second, ensuring minimal disruption.

- Offline replanning capability: Connectivity can never be guaranteed, so replanning must be able to run offline, on the workcell itself. Fast, lightweight re-solves make this possible: our scheduler keeps adapting workflows even without a cloud connection, so lab operations continue uninterrupted.

- Efficient use of resources: The computational efficiency of OR-Tools means we don’t need excessive compute resources for replanning, keeping operational costs and infrastructure complexity low.

In short, our investment in a robust and flexible planning infrastructure—powered by modular cloud deployment, precise versioning, and the efficiency of OR-Tools—ensures our scheduler is powerful enough to handle the most demanding lab environments, yet flexible enough to adapt as your lab’s needs grow and evolve.

Conclusion

Not all schedulers are created equal. The difference lies in clarity, flexibility, reliability, user experience, and adaptability in the face of real-world complexity.

At Automata, our goal is simple: empower labs to achieve unprecedented automation reliability and efficiency, reducing frustration and increasing throughput. We’ve carefully built our dynamic replanning scheduler around these foundational principles, to provide powerful and intuitive ways to articulate workflows through both graphical and programmatic means, while ensuring the clarity of our scheduler outputs for seamless downstream integration. We recognize that robust scheduling goes beyond merely instrument tasks, fully incorporating critical operator and software-based activities into the workflow with equal precision. Our rich instrument integration philosophy ensures deep, useful control across diverse lab equipment, and our comprehensive error handling transforms inevitable disruptions into manageable events. All this is powered by a carefully engineered infrastructure that leverages cloud-native modularity, meticulous versioning, and Google’s OR-Tools for blazing-fast dynamic replanning.

If you’re curious how our scheduling solutions could fit into your automation stack, or if you’d like more details on anything we’ve discussed, please reach out—we’d be excited to connect and show you what’s possible.